Alteryx Machine Learning Discussions

For Each Loop For A Prediction Model

Is there a way to create a for-each loop for a time series model? For instance, filtering the transactions for each product and sending specific product transactions to a time series model, and finally combining all predictions for all products.

Hello,

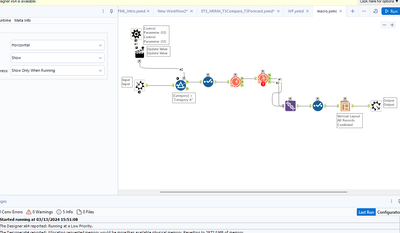

You absolutely could. The answer, as always, depends on which tools you are using, but I put together a quick example that illustrates the point using the R tools. If you are using Alteryx Machine Learning on the cloud, the same concept applies, but you would use the ML Predict tool rather than the TS Predict tool.

To accomplish what you are describing, assuming you are using the same model for the different categories, there are a couple of ways to tackle it:

1. You could filter out each dataset and essentially create a new path for each category, with its own score tool on each path. This is the easiest way, but it can get convoluted and complex fast.

2. A better way is to use a Batch macro, with each category supplied as the value of the control parameter.

I have attached a working example. It is not the most efficient way by any means, but it proves the concept. In the example there are two categories, and the output of the batch macro is both charts, stacked one on top of the other.
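For anyone who wants to see the batch idea outside of a workflow, here is a minimal Python sketch of the same pattern: split the transactions by product, run one model per product, and stack the predictions. It is not the attached macro. The names `forecast_series` and `forecast_per_product` are hypothetical, and simple exponential smoothing is only a stand-in for whatever time series model you actually use.

```python
from collections import defaultdict


def forecast_series(values, horizon=3, alpha=0.5):
    """Stand-in model (assumption): simple exponential smoothing, flat forecast."""
    level = values[0]
    for v in values[1:]:
        level = alpha * v + (1 - alpha) * level
    return [level] * horizon


def forecast_per_product(transactions, horizon=3):
    """transactions: iterable of (product, period, amount) tuples."""
    # Step 1: split the data by product (the role of the control parameter).
    by_product = defaultdict(list)
    for product, period, amount in transactions:
        by_product[product].append((period, amount))

    # Step 2: run the model once per product and stack the results.
    combined = []
    for product, rows in by_product.items():
        rows.sort()  # order each product's history by period
        values = [amount for _, amount in rows]
        last_period = rows[-1][0]
        forecasts = forecast_series(values, horizon)
        for step, prediction in enumerate(forecasts, start=1):
            combined.append((product, last_period + step, prediction))
    return combined


transactions = [
    ("A", 1, 10.0), ("A", 2, 12.0), ("A", 3, 14.0),
    ("B", 1, 100.0), ("B", 2, 90.0), ("B", 3, 95.0),
]
predictions = forecast_per_product(transactions, horizon=2)
for row in predictions:
    print(row)
```

Each product gets its own forecast rows, and the combined list plays the part of the stacked macro output.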

Also, it would be good to evaluate, for each category of products, whether a different time series model gives you better accuracy.
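A rough sketch of that kind of per-category comparison, in Python rather than in a workflow: hold out the last few points of each category, score each candidate model on them, and keep the one with the lowest error. The two candidates (`naive_last`, `historical_mean`), the holdout size, and the sample data are all illustrative assumptions, not anything from the attached example.

```python
def naive_last(train, horizon):
    """Candidate 1 (assumption): repeat the last observed value."""
    return [train[-1]] * horizon


def historical_mean(train, horizon):
    """Candidate 2 (assumption): repeat the mean of the training history."""
    return [sum(train) / len(train)] * horizon


CANDIDATES = {"naive_last": naive_last, "historical_mean": historical_mean}


def mean_absolute_error(actual, predicted):
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def best_model_per_category(series_by_category, holdout=2):
    """Pick the candidate with the lowest holdout error for each category."""
    best = {}
    for category, values in series_by_category.items():
        train, test = values[:-holdout], values[-holdout:]
        scores = {
            name: mean_absolute_error(test, model(train, holdout))
            for name, model in CANDIDATES.items()
        }
        best[category] = min(scores, key=scores.get)
    return best


series_by_category = {
    "trending": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    "stable": [10.0, 12.0, 10.0, 12.0, 11.0, 11.0],
}
choice = best_model_per_category(series_by_category)
print(choice)
```

With this toy data the trending category prefers the last-value model and the stable category prefers the mean, which is the point: one model does not have to fit every category.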

Take a look at the TS Model Factory. Disclaimer: I haven't used it in several years, but it is what you're after.

It will have a similar effect to batching but work much, much quicker. These results will help to inform your batches as well. One of the most common issues when people start going down the simple batching route is that individual batches do not have enough data to really predict, so it may make sense to "combine batches".

https://community.alteryx.com/t5/Community-Gallery/TS-Factory-Sample/ta-p/878594
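To make the "combine batches" point concrete, here is a small Python sketch that checks how many observations each category has and routes the thin ones into one shared batch. The function name `assign_batches`, the threshold of 24 points, and the `"COMBINED"` label are hypothetical choices for illustration. The right threshold depends on your data and model.

```python
def assign_batches(counts, min_points=24, fallback="COMBINED"):
    """Map each category to its own batch, or to a shared batch if it is too small.

    counts: dict of category -> number of observations.
    min_points: illustrative threshold (assumption), not a recommended value.
    """
    return {
        category: (category if n >= min_points else fallback)
        for category, n in counts.items()
    }


counts = {"A": 36, "B": 5, "C": 8}
batches = assign_batches(counts, min_points=24)
print(batches)
```

Note that the combined batch can still be too small (here B and C together have only 13 points), so it is worth checking the size of the shared batch as well before modelling it.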
